This article shows how to set up and use a custom analyzer in Elasticsearch using ElasticsearchCRUD. An analyzer with a custom synonym token filter is created and added to the index, so that a search for any one of the configured synonyms returns the documents containing any of the equivalent names.

Code: https://github.com/damienbod/ElasticsearchSynonymAnalyzer

Other Tutorials:

Part 1: ElasticsearchCRUD introduction

Part 2: MVC application search with simple documents using autocomplete, jQuery and jTable

Part 3: MVC Elasticsearch CRUD with nested documents

Part 4: Data Transfer from MS SQL Server using Entity Framework to Elasticsearch

Part 5: MVC Elasticsearch with child, parent documents

Part 6: MVC application with Entity Framework and Elasticsearch

Part 7: Live Reindex in Elasticsearch

Part 8: CSV export using Elasticsearch and Web API

Part 9: Elasticsearch Parent, Child, Grandchild Documents and Routing

Part 10: Elasticsearch Type mappings with ElasticsearchCRUD

Part 11: Elasticsearch Synonym Analyzer using ElasticsearchCRUD

Part 12: Using Elasticsearch German Analyzer

Part 13: MVC google maps search using Elasticsearch

Part 14: Search Queries and Filters with ElasticsearchCRUD

Part 15: Elasticsearch Bulk Insert

Part 16: Elasticsearch Aggregations With ElasticsearchCRUD

Part 17: Searching Multiple Indices and Types in Elasticsearch

Part 18: MVC searching with Elasticsearch Highlighting

Part 19: Index Warmers with ElasticsearchCRUD

Creating a custom Synonym Analyzer

Creating an index with custom analyzers, filters or tokenizers is straightforward in ElasticsearchCRUD. Strongly typed configuration classes are available for all of these, as well as const definitions for all the built-in (default) options. In the example below, a SynonymTokenFilter is created and added to the custom analyzer. The analysis configuration is then included in the index settings, along with the number of shards and replicas.

```csharp
var indexDefinition = new IndexDefinition
{
    IndexSettings =
    {
        Analysis = new Analysis
        {
            Analyzer =
            {
                Analyzers = new List<AnalyzerBase>
                {
                    // Custom analyzer: split on whitespace, lowercase, then apply the synonym filter
                    new CustomAnalyzer("john_analyzer")
                    {
                        Tokenizer = DefaultTokenizers.Whitespace,
                        Filter = new List<string> {DefaultTokenFilters.Lowercase, "john_synonym"}
                    }
                }
            },
            Filters =
            {
                CustomFilters = new List<AnalysisFilterBase>
                {
                    // Explicit mappings: the token on the left is replaced by the tokens on the right
                    new SynonymTokenFilter("john_synonym")
                    {
                        Synonyms = new List<string>
                        {
                            "sean => john, sean, séan",
                            "séan => john, sean, séan",
                            "johny => john",
                        }
                    }
                }
            }
        },
        NumberOfShards = 3,
        NumberOfReplicas = 1
    },
};
```
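To see why these rules let every spelling find every John and Sean, the analysis chain can be modelled in a few lines of C#. This is a simplified sketch, not how Elasticsearch implements it: it assumes whitespace splitting, invariant lowercasing, and only the explicit "lhs => rhs" rule form used here. ParseRules and Analyze are hypothetical helpers, and a match query hit is approximated as "any query token equals any indexed token".

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Simplified model of john_analyzer: whitespace tokenizer -> lowercase -> synonym filter.
// Hypothetical helpers for illustration only; the real Elasticsearch filter also tracks token
// positions and supports multi-word synonyms and equivalence (comma-only) rules.
static Dictionary<string, string[]> ParseRules(IEnumerable<string> ruleLines)
{
    var map = new Dictionary<string, string[]>();
    foreach (var line in ruleLines)
    {
        // Explicit mapping "lhs => rhs1, rhs2": the lhs token is replaced by the rhs tokens
        var parts = line.Split("=>");
        if (parts.Length != 2)
            throw new FormatException($"Unsupported rule: {line}");
        map[parts[0].Trim()] = parts[1].Split(',')
            .Select(s => s.Trim())
            .Where(s => s.Length > 0)
            .ToArray();
    }
    return map;
}

static List<string> Analyze(string text, Dictionary<string, string[]> synonyms)
{
    var tokens = new List<string>();
    // An empty separator array makes Split break on any whitespace
    foreach (var word in text.Split(Array.Empty<char>(), StringSplitOptions.RemoveEmptyEntries))
    {
        var token = word.ToLowerInvariant();
        // Tokens without a rule pass through unchanged
        tokens.AddRange(synonyms.TryGetValue(token, out var replacements) ? replacements : new[] { token });
    }
    return tokens;
}

var rules = ParseRules(new[]
{
    "sean => john, sean, séan",
    "séan => john, sean, séan",
    "johny => john",
});

var names = new[] { "John", "Johny", "Paul", "Séan", "Sean", "Tadhg" };
foreach (var query in new[] { "sean", "Séan", "Johny" })
{
    // Approximates a match query: a hit when any query token equals any indexed token
    var queryTokens = Analyze(query, rules);
    var hits = names.Where(n => Analyze(n, rules).Intersect(queryTokens).Any());
    Console.WriteLine($"{query}: {string.Join(", ", hits)}");
}
```

Each of the three queries should print the same four names (John, Johny, Séan, Sean), while Paul and Tadhg never match. The synonyms are applied at both index and search time because the same analyzer is used for both.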

The index settings are created as follows in Elasticsearch:

http://localhost:9200/_settings

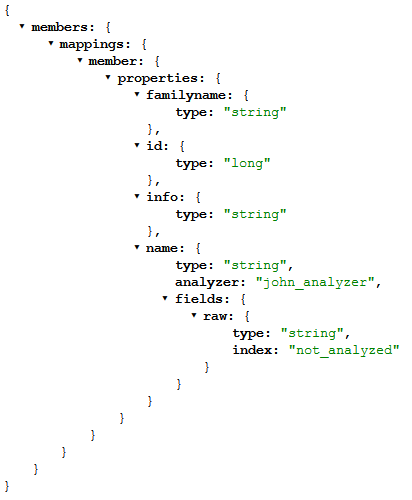
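For comparison with the settings shown above, the IndexDefinition corresponds roughly to the raw index settings built below. This C# sketch uses System.Text.Json; it is an assumption about the equivalent JSON, not a capture of what ElasticsearchCRUD sends, and BuildSettings is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Encodings.Web;
using System.Text.Json;

// Hypothetical helper: raw index settings intended to mirror the IndexDefinition (assumption).
static string BuildSettings()
{
    var settings = new Dictionary<string, object>
    {
        ["settings"] = new Dictionary<string, object>
        {
            ["number_of_shards"] = 3,
            ["number_of_replicas"] = 1,
            ["analysis"] = new Dictionary<string, object>
            {
                // Custom analyzer: whitespace tokenizer, then lowercase, then the synonym filter
                ["analyzer"] = new Dictionary<string, object>
                {
                    ["john_analyzer"] = new Dictionary<string, object>
                    {
                        ["type"] = "custom",
                        ["tokenizer"] = "whitespace",
                        ["filter"] = new[] { "lowercase", "john_synonym" }
                    }
                },
                // Synonym token filter with the explicit "lhs => rhs" rules
                ["filter"] = new Dictionary<string, object>
                {
                    ["john_synonym"] = new Dictionary<string, object>
                    {
                        ["type"] = "synonym",
                        ["synonyms"] = new[]
                        {
                            "sean => john, sean, séan",
                            "séan => john, sean, séan",
                            "johny => john"
                        }
                    }
                }
            }
        }
    };

    // Relaxed escaping keeps "é" readable instead of emitting \u00E9
    var options = new JsonSerializerOptions { WriteIndented = true, Encoder = JavaScriptEncoder.UnsafeRelaxedJsonEscaping };
    return JsonSerializer.Serialize(settings, options);
}

// The printed JSON could be sent as the body of PUT http://localhost:9200/members
Console.WriteLine(BuildSettings());
```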

Creating the mapping for analyzed and non-analyzed fields

Next, a mapping for the member type is created from the Member class. The Name property is analyzed with the custom john_analyzer, and the Fields configuration also stores the original text in a non-analyzed sub-field, defined by the FieldDataDefinition class.

```csharp
public class Member
{
    public long Id { get; set; }

    // Analyzed with john_analyzer; Fields adds a non-analyzed copy described by FieldDataDefinition
    [ElasticsearchString(Index = StringIndex.analyzed, Analyzer = "john_analyzer", Fields = typeof(FieldDataDefinition))]
    public string Name { get; set; }

    public string FamilyName { get; set; }

    public string Info { get; set; }
}

public class FieldDataDefinition
{
    // Keeps the original, untokenized value of Name
    [ElasticsearchString(Index = StringIndex.not_analyzed)]
    public string Raw { get; set; }
}
```
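The two attributes describe a multi-field: one analyzed field plus a non-analyzed copy. As a rough sketch in Elasticsearch 1.x mapping syntax (assumed, not ElasticsearchCRUD's exact output), the member mapping could look like the JSON built below. The lower-case field names id, name and raw, and the BuildMemberMapping helper, are assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper: an Elasticsearch 1.x style mapping for the member type (assumption).
static string BuildMemberMapping()
{
    var mapping = new Dictionary<string, object>
    {
        ["member"] = new Dictionary<string, object>
        {
            ["properties"] = new Dictionary<string, object>
            {
                ["id"] = new Dictionary<string, object> { ["type"] = "long" },
                ["name"] = new Dictionary<string, object>
                {
                    // The main field is tokenized by the custom analyzer
                    ["type"] = "string",
                    ["index"] = "analyzed",
                    ["analyzer"] = "john_analyzer",
                    // Multi-field: a non-analyzed copy, addressed as name.raw
                    ["fields"] = new Dictionary<string, object>
                    {
                        ["raw"] = new Dictionary<string, object>
                        {
                            ["type"] = "string",
                            ["index"] = "not_analyzed"
                        }
                    }
                }
            }
        }
    };
    return JsonSerializer.Serialize(mapping, new JsonSerializerOptions { WriteIndented = true });
}

Console.WriteLine(BuildMemberMapping());
```

With a mapping like this, queries on name go through john_analyzer, while an exact term lookup on name.raw would only match the stored original value, for example "Séan".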

The index with mappings and settings is created using the following method:

```csharp
_context.IndexCreate<Member>(indexDefinition);
```

The resulting mapping can be viewed in Elasticsearch at:

http://localhost:9200/_mapping

Adding some data

Now some data can be added to the index using a bulk insert.

```csharp
public void CreateSomeMembers()
{
    var jm = new Member { Id = 1, FamilyName = "Moore", Info = "In the club since 1976", Name = "John" };
    _context.AddUpdateDocument(jm, jm.Id);

    var jj = new Member { Id = 2, FamilyName = "Jones", Info = "A great help for the background staff", Name = "Johny" };
    _context.AddUpdateDocument(jj, jj.Id);

    var pm = new Member { Id = 3, FamilyName = "Murphy", Info = "Likes to take control", Name = "Paul" };
    _context.AddUpdateDocument(pm, pm.Id);

    var sm = new Member { Id = 4, FamilyName = "McGurk", Info = "Fresh and fit", Name = "Séan" };
    _context.AddUpdateDocument(sm, sm.Id);

    var sob = new Member { Id = 5, FamilyName = "O'Brien", Info = "Not much use, bit of a problem", Name = "Sean" };
    _context.AddUpdateDocument(sob, sob.Id);

    // Each member needs its own Id; reusing 5 here would overwrite Sean O'Brien
    var tmc = new Member { Id = 6, FamilyName = "McCauley", Info = "Couldn't ask for anyone better", Name = "Tadhg" };
    _context.AddUpdateDocument(tmc, tmc.Id);

    // Sends the pending documents to Elasticsearch as a bulk request
    _context.SaveChanges();
}
```
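The Elasticsearch _bulk API expects newline-delimited JSON: an action line followed by the document source for each document, with a final newline. The sketch below builds such a body for the members index and member type used in this article. It illustrates the format only; the exact request ElasticsearchCRUD produces may differ, BuildBulkBody is a hypothetical helper, and the source field name is illustrative. It also rejects repeated ids, because a second index action with the same _id replaces the first document.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text;
using System.Text.Json;

// Hypothetical helper: builds an Elasticsearch _bulk body (NDJSON) of index actions.
static string BuildBulkBody(string index, string type, IEnumerable<(long Id, object Source)> docs)
{
    var sb = new StringBuilder();
    var seen = new HashSet<long>();
    foreach (var (id, source) in docs)
    {
        // A repeated _id would silently overwrite the earlier document, so reject it up front
        if (!seen.Add(id))
            throw new ArgumentException($"Duplicate document id {id}");

        // Action/metadata line, then the document source line; each ends with a newline
        var action = new { index = new { _index = index, _type = type, _id = id.ToString(CultureInfo.InvariantCulture) } };
        sb.Append(JsonSerializer.Serialize(action)).Append('\n');
        sb.Append(JsonSerializer.Serialize(source)).Append('\n');
    }
    return sb.ToString();
}

var body = BuildBulkBody("members", "member", new (long, object)[]
{
    (1, new { name = "John" }),
    (2, new { name = "Johny" }),
});
Console.Write(body);
```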

Search using the analyzed data

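Because we want to search the analyzed field, the data is searched with a match query, which runs the search text through the field's analyzer before matching. Searching for sean, séan, Sean, Séan, John or Johny therefore returns every member whose name is one of these variants.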

```csharp
// Example query sent to Elasticsearch:
// {
//   "query": {
//     "match": { "name": "sean" }
//   }
// }
public SearchResult<Member> Search(string name)
{
    // Match query on the analyzed name field
    var query = "{ \"query\": { \"match\": {\"name\": \"" + name + "\"} } }";
    return _context.Search<Member>(query).PayloadResult;
}
```
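Concatenating the search text into the JSON string produces invalid JSON as soon as the text contains a double quote or a backslash. A safer sketch lets System.Text.Json do the escaping; BuildMatchQuery is a hypothetical helper, and its result can be passed to _context.Search<Member>(...) in the Search method above.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper: builds { "query": { "match": { <field>: <text> } } } with proper escaping.
static string BuildMatchQuery(string field, string text)
{
    // The field is a dictionary key so it can vary; the serializer escapes both key and value
    var body = new { query = new { match = new Dictionary<string, string> { [field] = text } } };
    return JsonSerializer.Serialize(body);
}

Console.WriteLine(BuildMatchQuery("name", "Johny"));
Console.WriteLine(BuildMatchQuery("name", "O\"Brien"));
```

For "Johny" this produces {"query":{"match":{"name":"Johny"}}}; the second call shows that an embedded quote is escaped instead of breaking the request.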

The request is sent as follows:

```http
POST http://localhost:9200/members/member/_search HTTP/1.1
Content-Type: application/json
Host: localhost:9200
Content-Length: 43
Expect: 100-continue
Connection: Keep-Alive

{ "query": { "match": {"name": "Johny"} } }
```

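You can also inspect the tokens produced by the analyzer with the _analyze API, passing the text to analyze either in the request body or as the text query parameter:

http://localhost:9200/members/_analyze?analyzer=john_analyzer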
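When the text is passed as the text query parameter, spaces and accented characters such as é must be URL-encoded. A minimal sketch using Uri.EscapeDataString; BuildAnalyzeUrl is a hypothetical helper, and the URL layout follows the example above.

```csharp
using System;

// Hypothetical helper: builds an _analyze URL with the analyzer and text safely encoded.
static string BuildAnalyzeUrl(string baseUrl, string index, string analyzer, string text) =>
    // EscapeDataString percent-encodes the UTF-8 bytes, e.g. "é" becomes "%C3%A9"
    $"{baseUrl}/{index}/_analyze?analyzer={Uri.EscapeDataString(analyzer)}&text={Uri.EscapeDataString(text)}";

Console.WriteLine(BuildAnalyzeUrl("http://localhost:9200", "members", "john_analyzer", "Séan Johny"));
```

This prints http://localhost:9200/members/_analyze?analyzer=john_analyzer&text=S%C3%A9an%20Johny. Given the synonym rules, the analyzer should return john, sean and séan for Séan, and john for Johny.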

Links:

https://www.nuget.org/packages/ElasticsearchCRUD/

http://www.elasticsearch.org/guide/en/elasticsearch/guide/current/using-synonyms.html

http://www.elasticsearch.org/guide/en/elasticsearch/guide/current/multi-word-synonyms.html

http://www.elasticsearch.org/guide/en/elasticsearch/guide/current/synonyms.html

http://stackoverflow.com/questions/18405779/how-to-configure-the-synonyms-path-in-elasticsearch

http://www.elasticsearch.org/guide/en/elasticsearch/reference/current/indices-analyze.html

https://fuzzyquery.wordpress.com/2013/08/15/example-shingle-filter-mapping/

http://www.elasticsearch.org/guide/en/elasticsearch/guide/current/using-stopwords.html

https://blog.codecentric.de/en/2014/05/elasticsearch-indexing-performance-cheatsheet/


```shell
# Analyze text: "the <b>quick</b> bröwn <img src="fox"/> &quot;jumped&quot;"
curl -XPUT 'http://127.0.0.1:9200/foo/' -d '
{
  "index" : {
    "analysis" : {
      "analyzer" : {
        "test_1" : {
          "char_filter" : [
            "html_strip"
          ],
          "tokenizer" : "standard"
        },
        "test_2" : {
          "filter" : [
            "standard",
            "lowercase",
            "stop",
            "asciifolding"
          ],
          "char_filter" : [
            "html_strip"
          ],
          "tokenizer" : "standard"
        }
      }
    }
  }
}
'
curl -XGET 'http://127.0.0.1:9200/foo/_analyze?format=text&text=the+%3Cb%3Equick%3C%2Fb%3E+br%C3%B6wn+%3Cimg+src%3D%22fox%22%2F%3E+%26quot%3Bjumped%26quot%3B&analyzer=standard'
# "tokens" : "[b:5->6:<ALPHANUM>]
#
# 3:
# [quick:7->12:<ALPHANUM>]
#
# 4:
# [b:14->15:<ALPHANUM>]
#
# 5:
# [bröwn:17->22:<ALPHANUM>]
#
# 6:
# [img:24->27:<ALPHANUM>]
#
# 7:
# [src:28->31:<ALPHANUM>]
#
# 8:
# [fox:33->36:<ALPHANUM>]
#
# 9:
# [quot:41->45:<ALPHANUM>]
#
# 10:
# [jumped&quot:46->57:<COMPANY>]
# "
# }
curl -XGET 'http://127.0.0.1:9200/foo/_analyze?format=text&text=the+%3Cb%3Equick%3C%2Fb%3E+br%C3%B6wn+%3Cimg+src%3D%22fox%22%2F%3E+%26quot%3Bjumped%26quot%3B&analyzer=test_1'
# {
# "tokens" : "[the:0->3:<ALPHANUM>]
#
# 2:
# [quick:7->12:<ALPHANUM>]
#
# 3:
# [bröwn:17->22:<ALPHANUM>]
#
# 4:
# [jumped:46->52:<ALPHANUM>]
# "
# }
curl -XGET 'http://127.0.0.1:9200/foo/_analyze?format=text&text=the+%3Cb%3Equick%3C%2Fb%3E+br%C3%B6wn+%3Cimg+src%3D%22fox%22%2F%3E+%26quot%3Bjumped%26quot%3B&analyzer=test_2'
# {
# "tokens" : "[quick:7->12:<ALPHANUM>]
#
# 3:
# [brown:17->22:<ALPHANUM>]
#
# 4:
# [jumped:46->52:<ALPHANUM>]
# "
# }
```
